TL;DR

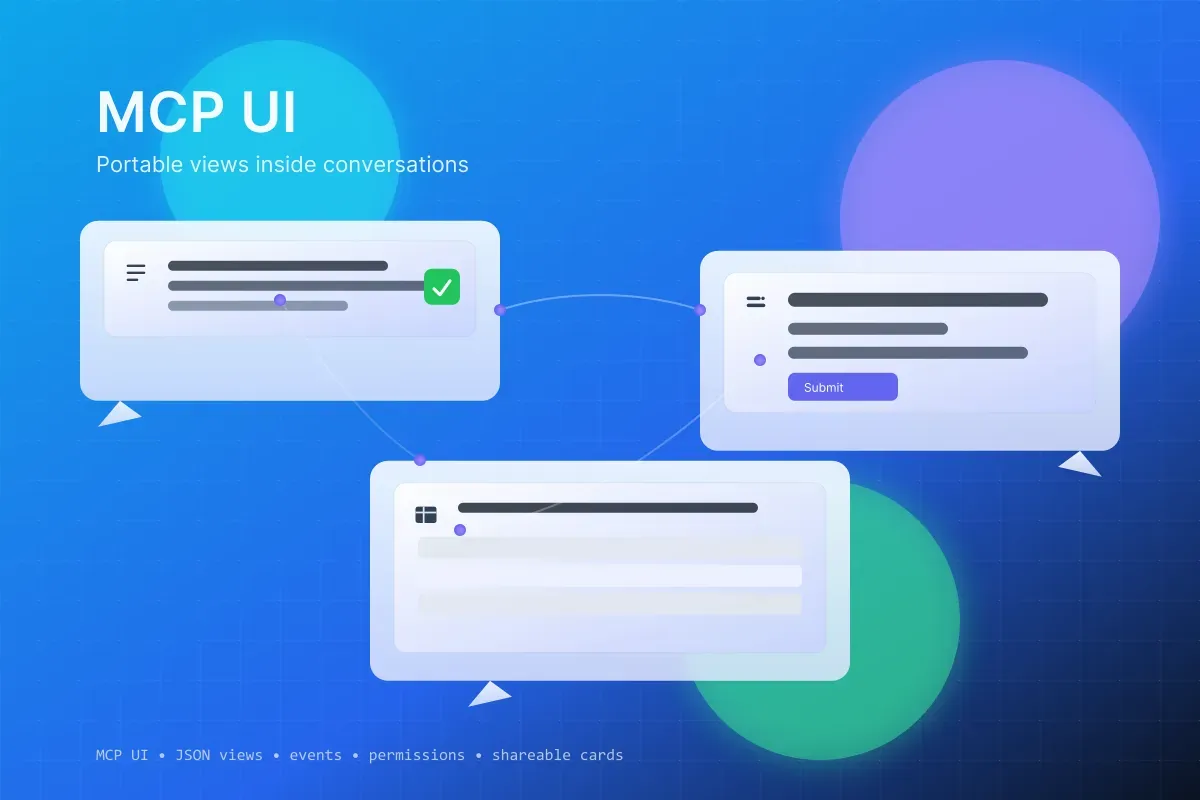

- MCP turned tools and data sources into a universal capability layer for AI agents. An emerging UI profile (“MCP UI”) can do the same for interfaces.

- With MCP UI, apps expose declarative, portable views that render inside any compliant chat client, with no iframes and no one-off plugins.

- The result is a web-by-reference: linkable, permissioned, stateful UI that flows through conversations instead of tabs.

- Developers ship one capability that works across assistants. Users stay in the chat while completing multi-step tasks with real UI, not walls of text.

A quick refresher: What is MCP?

The Model Context Protocol (MCP) standardises how AI clients (LLMs, agents, chat apps) discover and call server-side capabilities (tools, data sources, and events) through a language-agnostic, transport-agnostic protocol. Think “USB for AI tools”: enumerate, describe, call, stream.
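To make "enumerate, describe, call" concrete, here is a minimal in-memory sketch of the request/response shapes. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the method names the protocol uses for tools; the `echo` tool, the `handle` function, and the simplified types are assumptions made for illustration, not an SDK API.

```typescript
// Minimal in-memory sketch of MCP-style tool discovery and invocation.
// The `echo` tool and `handle` dispatcher are hypothetical.

interface RpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

interface RpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

interface ToolDef {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>; // JSON Schema describing the arguments
  run: (args: Record<string, unknown>) => string;
}

const tools: ToolDef[] = [
  {
    name: "echo",
    description: "Returns the text it was given.",
    inputSchema: { type: "object", properties: { text: { type: "string" } }, required: ["text"] },
    run: (args) => String(args.text),
  },
];

function handle(req: RpcRequest): RpcResponse {
  if (req.method === "tools/list") {
    // Enumerate and describe: expose metadata only, never the implementation.
    const listed = tools.map(({ name, description, inputSchema }) => ({ name, description, inputSchema }));
    return { jsonrpc: "2.0", id: req.id, result: { tools: listed } };
  }
  if (req.method === "tools/call") {
    const name = req.params?.name;
    const tool = tools.find((t) => t.name === name);
    if (!tool) {
      return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: `Unknown tool: ${String(name)}` } };
    }
    const args = (req.params?.arguments ?? {}) as Record<string, unknown>;
    return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text: tool.run(args) }] } };
  }
  return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
}

const listResponse = handle({ jsonrpc: "2.0", id: 1, method: "tools/list" });
const callResponse = handle({
  jsonrpc: "2.0",
  id: 2,
  method: "tools/call",
  params: { name: "echo", arguments: { text: "hi" } },
});
console.log(JSON.stringify(listResponse));
console.log(JSON.stringify(callResponse));
```

A real server would sit behind a transport (stdio or HTTP) and validate arguments against `inputSchema`; both are left out here to keep the shapes visible.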

Today, most MCP integrations are headless: the assistant calls a tool, then describes results back to the user as text. That works, but it’s clumsy for multi-step workflows that need forms, tables, previews, and confirmations.

Enter an emerging idea: an interoperable UI surface for MCP.

What would “MCP UI” standardise?

At minimum, a UI profile for MCP would define:

- View model schema

  - A small, declarative component vocabulary (form, list, table, details, media, steps, modal, toast) described as JSON.

  - Render hints (compact, dense, read-only), affordances (copy, download), and accessibility semantics.

- Event contract

  - Standard UI events (submit, change, select, navigate, open-link) mapped to MCP tool invocations or subscriptions.

  - Streaming and progressive updates for long-running calls.

- State and identity

  - Session-scoped state shared between the chat thread and the capability (think conversational navigation).

  - Identity/permissions handshake so the UI can request scopes at interaction time (e.g., “Read calendar”, “Write PR comments”).

- Linking and shareability

  - Deep-linkable views with stable IDs so you can reference “the table you showed me earlier, row 3”.

  - Copy/paste across clients: a view rendered in Client A should reconstruct in Client B from its JSON payload.

- Security envelope

  - Sandboxed rendering (no arbitrary script from servers), strict data-only contracts.

  - Content provenance and redaction controls for sensitive fields.

If this sounds like HTML but for conversations, you’re not far off.
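As a rough sketch of what the view model and the data-only contract could look like in a client, the snippet below accepts a view only if it arrives as JSON text, names a component from the vocabulary listed above, and carries a stable ID. No published MCP UI schema is assumed: the `View` type, `COMPONENT_TYPES`, and `parseView` are hypothetical names.

```typescript
// Hypothetical view vocabulary, taken from the component list above.
const COMPONENT_TYPES = ["form", "list", "table", "details", "media", "steps", "modal", "toast"] as const;
type ComponentType = (typeof COMPONENT_TYPES)[number];

interface View {
  type: ComponentType;
  id: string; // stable ID so the view can be referenced later in the thread
  [field: string]: unknown; // component-specific fields
}

// Accepts a view only as JSON text. Parsing from text means the result can
// contain data but never functions or other executable values.
function parseView(json: string): View | null {
  let raw: unknown;
  try {
    raw = JSON.parse(json);
  } catch {
    return null; // not JSON at all
  }
  if (typeof raw !== "object" || raw === null || Array.isArray(raw)) return null;
  const candidate = raw as Record<string, unknown>;
  const type = candidate.type;
  const id = candidate.id;
  // Unknown component types are rejected rather than rendered on a best-effort basis.
  if (typeof type !== "string" || !(COMPONENT_TYPES as readonly string[]).includes(type)) return null;
  if (typeof id !== "string" || id.length === 0) return null;
  return candidate as View;
}

// "Client A" serialises a view; "Client B" reconstructs it from the payload alone.
const wire = JSON.stringify({ type: "table", id: "slots#week37", columns: ["Day", "Start"], rows: [["Tue", "10:00"]] });
const rehydrated = parseView(wire);
const rejected = parseView('{"type":"script","id":"x"}');
console.log(rehydrated?.id, rejected);
```

A production validator would check each component's fields against a versioned schema; this sketch only checks the envelope.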

Why embed UI in chat at all?

- Reduced context switching: Users stay in flow. No auth popups or tab juggling.

- Trust-through-consistency: Common controls and permission prompts across assistants.

- Better correctness: Forms and previews reduce ambiguous, lossy natural-language back-and-forth.

- Collaboration: A single chat thread becomes a multiplayer surface with shared state and auditability.

A mental model: the conversational SPA

Picture a chat thread as a minimal shell. Messages are narrative. MCP UI views are the panels that appear inline. The assistant orchestrates:

- Discovery: “I can schedule events” → presents a schedule form.

- Data: Tool returns a table of available slots → paginated list with filters.

- Action: User selects, grants calendar scope → tool executes, UI updates with a confirmation card.

- Share: Copy the confirmation card to another channel; it re-hydrates in their client.

All of this is serializable as JSON views and events, with no bespoke plugin per client.

Example: Booking a meeting inside chat

- User: “Find a 30-minute slot with Jane next week and invite the design team.”

- Assistant: calls `scheduler.searchSlots` via MCP.

- Tool returns both data and a UI view:

      {
        "type": "table",
        "id": "slots#week37",
        "columns": ["Day", "Start", "End", "Attendees"],
        "rows": [
          ["Tue", "10:00", "10:30", ["Jane"]],
          ["Wed", "14:00", "14:30", ["Jane", "Alex"]]
        ],
        "actions": [
          {
            "label": "Schedule this",
            "event": "select-row"
          },
          {
            "label": "Filter",
            "event": "open-filter"
          }
        ]
      }

- User clicks a row → client emits `select-row` with the selected index → assistant calls `scheduler.schedule`.

- Tool requests permission `calendar:write` via the standardized scope prompt.

- Confirmation card renders with a deep link to the created event and a “Share to channel” action.

No browser hop required. The UI is portable, accessible, and composable.
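The step from a `select-row` event to a `scheduler.schedule` call can be sketched as a pure function. The event payload shape (`viewId`, `rowIndex`), the `ToolCall` type, and the argument names derived from the column headers are all assumptions; the walkthrough only fixes the event name, the tool name, and the `calendar:write` scope.

```typescript
// Hypothetical shapes for the table view, the UI event, and the resulting tool call.
interface TableView {
  type: "table";
  id: string;
  columns: string[];
  rows: unknown[][];
}

interface SelectRowEvent {
  event: "select-row";
  viewId: string;
  rowIndex: number;
}

interface ToolCall {
  tool: string;
  requiredScope: string; // the client would prompt for this before executing
  arguments: Record<string, unknown>;
}

// Turn a row selection into a tool invocation by pairing column names with cell values.
function toScheduleCall(view: TableView, evt: SelectRowEvent): ToolCall {
  if (evt.viewId !== view.id) throw new Error(`Event targets ${evt.viewId}, not ${view.id}`);
  const row = view.rows[evt.rowIndex];
  if (row === undefined) throw new Error(`Row ${evt.rowIndex} is out of range`);
  const args: Record<string, unknown> = {};
  view.columns.forEach((column, i) => {
    args[column.toLowerCase()] = row[i];
  });
  return { tool: "scheduler.schedule", requiredScope: "calendar:write", arguments: args };
}

const slots: TableView = {
  type: "table",
  id: "slots#week37",
  columns: ["Day", "Start", "End", "Attendees"],
  rows: [
    ["Tue", "10:00", "10:30", ["Jane"]],
    ["Wed", "14:00", "14:30", ["Jane", "Alex"]],
  ],
};

const call = toScheduleCall(slots, { event: "select-row", viewId: "slots#week37", rowIndex: 1 });
console.log(JSON.stringify(call));
```

Keeping the mapping pure means the same event can be replayed or audited later without touching the calendar.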

How this changes the web’s shape

- Pages become Capabilities: Instead of shipping an entire page, you expose a handful of capabilities each with declarative views.

- SEO → AEO (Assistant Experience Optimisation): You optimise capability manifests and view descriptors so assistants can rank, test, and recommend them.

- Links become View Intents: `mcp://vendor.app/capability#view=details&id=123` rather than `https://…`. Clients can resolve intents locally or via the network.

- Analytics get healthier: Event streams are explicit (submit/select) instead of inferred with brittle click tracking.
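A client could resolve a view intent by parsing it like any other URL. The sketch below uses the standard `URL` and `URLSearchParams` classes available in browsers and Node; the `mcp://` scheme and the fragment layout come from the example link above and are not a registered standard, and `ViewIntent` and `parseViewIntent` are hypothetical names.

```typescript
interface ViewIntent {
  vendor: string; // host part, e.g. "vendor.app"
  capability: string; // path without the leading slash
  params: Record<string, string>; // key/value pairs carried in the fragment
}

function parseViewIntent(link: string): ViewIntent {
  const url = new URL(link);
  if (url.protocol !== "mcp:") throw new Error(`Not an mcp:// link: ${link}`);
  const params: Record<string, string> = {};
  // The fragment is formatted like a query string, so URLSearchParams can decode it.
  new URLSearchParams(url.hash.slice(1)).forEach((value, key) => {
    params[key] = value;
  });
  return { vendor: url.hostname, capability: url.pathname.replace(/^\/+/, ""), params };
}

const intent = parseViewIntent("mcp://vendor.app/capability#view=details&id=123");
console.log(intent);
```

Whether the client then resolves the intent from a local cache or over the network is a separate decision; the parsed structure is the same either way.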

Developer ergonomics

- One integration, many clients: Implement MCP once, ship UI as JSON; render across desktop chat, mobile chat, IDE agent, or voice-first assistants.

- Testability: Views are pure data, so you can snapshot-test them, fuzz their inputs, and validate them against a published schema.

- Progressive disclosure: Start headless; add MCP UI for steps that benefit from structure.

A minimal server shape (illustrative TypeScript)

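    // Illustrative sketch: an MCP server capability that returns raw data plus a UI view.
    // `docs` stands in for your own search index; it is a placeholder, not a real library.
    import { createHash } from "node:crypto";

    interface Doc { id: string; title: string; snippet: string }
    declare const docs: { index: { search(query: string): Promise<Doc[]> } };

    // Short, stable hash so the same query always maps to the same view ID.
    const hash = (s: string) => createHash("sha256").update(s).digest("hex").slice(0, 12);

    export async function searchDocs(query: string) {
      const results = await docs.index.search(query);
      return {
        data: results, // raw results for programmatic use
        view: {
          type: "list",
          id: `search:${hash(query)}`,
          // Show only the first page; `page.total` tells the client how many exist.
          items: results.slice(0, 10).map((r) => ({
            title: r.title,
            subtitle: r.snippet,
            action: { label: "Open", event: "open", value: r.id },
          })),
          page: { size: 10, total: results.length },
        },
      } as const;
    }

The client renders `view` verbatim and wires `open` events back to the capability.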
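On the client side, "rendering verbatim" can be as small as a function from view data to output. Here is a sketch of a plain-text renderer, the kind a terminal or voice-first client might use, plus a helper that turns a user's choice into the event sent back to the capability. The `ListView` type mirrors the list view in the server example; `renderList`, `activate`, and the `UiEvent` shape are hypothetical.

```typescript
interface ListItem {
  title: string;
  subtitle: string;
  action: { label: string; event: string; value: string };
}

interface ListView {
  type: "list";
  id: string;
  items: ListItem[];
  page: { size: number; total: number };
}

interface UiEvent {
  viewId: string;
  event: string;
  value: string;
}

// Render the view as numbered text lines. The view is data only, so rendering
// is a pure function with no server-provided code involved.
function renderList(view: ListView): string[] {
  const lines = view.items.map(
    (item, i) => `${i + 1}. ${item.title}: ${item.subtitle} [${item.action.label}]`,
  );
  lines.push(`Showing ${view.items.length} of ${view.page.total}`);
  return lines;
}

// Build the event the client would send back when the user picks an item.
function activate(view: ListView, index: number): UiEvent {
  const item = view.items[index];
  if (!item) throw new Error(`No item at index ${index}`);
  return { viewId: view.id, event: item.action.event, value: item.action.value };
}

const sample: ListView = {
  type: "list",
  id: "search:abc123",
  items: [
    { title: "Getting started", subtitle: "Install and run", action: { label: "Open", event: "open", value: "doc-1" } },
    { title: "API reference", subtitle: "All endpoints", action: { label: "Open", event: "open", value: "doc-2" } },
  ],
  page: { size: 10, total: 2 },
};

renderList(sample).forEach((line) => console.log(line));
console.log(JSON.stringify(activate(sample, 1)));
```

A graphical client would swap `renderList` for real components while keeping `activate` unchanged, which is the portability argument in miniature.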

Governance and standards

To work, MCP UI needs:

- A cross-vendor component vocabulary with strict accessibility and i18n guarantees.

- A versioned JSON schema and conformance tests.

- A clear permission model and audit events.

- A registry/discovery story (like a `capabilities.json`) akin to `robots.txt`/`well-known` on the web.

Expect early vendor-specific components, followed by a convergence toward a small, boring standard set, just like HTML’s core tags.

Risks and open questions

- Fragmentation: If each client invents its own components, portability suffers.

- Security: Rendering third-party views must be data-only and sandboxed.

- UX overload: Chats full of widgets could become noisy; defaults should prefer summarisation with progressive reveal.

- Offline: How much should clients cache views and rehydrate when tools are unavailable?

What you can do today

- Design capabilities, not pages: Slice workflows into callable tools with clear inputs/outputs.

- Return structured results: Even before UI standardisation, ship JSON that third-party clients (or your own) can render.

- Treat views as artefacts: Add IDs, make them linkable, and think about how they are referenced across a conversation.

- Be explicit about permissions: Model scopes per action; make consent granular and revocable.
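One way to model per-action, revocable consent is a small ledger keyed by action. This is a sketch under stated assumptions: `ConsentLedger` and its methods are hypothetical, and the action and scope names are borrowed from the booking example earlier in the post.

```typescript
// Tracks which scopes the user has granted for which action.
class ConsentLedger {
  private readonly grants = new Map<string, Set<string>>();

  grant(action: string, scope: string): void {
    const scopes = this.grants.get(action) ?? new Set<string>();
    scopes.add(scope);
    this.grants.set(action, scopes);
  }

  // Revoking one action's scope leaves every other grant untouched.
  revoke(action: string, scope: string): void {
    this.grants.get(action)?.delete(scope);
  }

  allows(action: string, scope: string): boolean {
    return this.grants.get(action)?.has(scope) ?? false;
  }
}

const ledger = new ConsentLedger();
ledger.grant("scheduler.searchSlots", "calendar:read");
ledger.grant("scheduler.schedule", "calendar:write");

// The user later withdraws write access; read access for search is unaffected.
ledger.revoke("scheduler.schedule", "calendar:write");

console.log(ledger.allows("scheduler.searchSlots", "calendar:read"));
console.log(ledger.allows("scheduler.schedule", "calendar:write"));
```

Keying consent by action rather than by app is what makes it granular: granting `calendar:write` for scheduling says nothing about any other tool that might ask for the same scope.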

The punchline

MCP connected assistants to the world’s capabilities. MCP UI brings those capabilities to where people already are: the conversation. If we get the contract right (small, accessible, secure), the new web won’t replace chat, and chat won’t replace the web. They’ll merge, with conversations as the routing layer and portable views as the UX.